As summer heat intensifies across the northern hemisphere, we face a grim annual ritual. Public health officials will warn that heat can kill and advise cities and citizens to take precautions, and climate activists will amplify the message—but despite these efforts heat will kill once again.

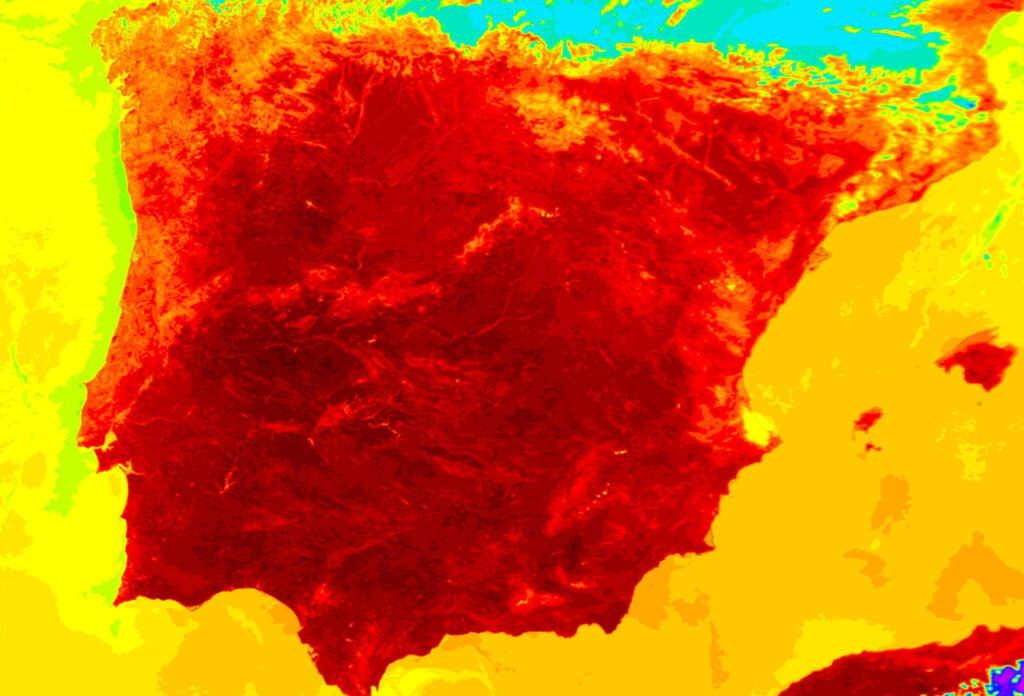

According to the World Meteorological Organization, the past eight years were the eight warmest on record. Heat broke records around the world in summer 2022—first in South Asia and then in North America, Europe, and China—and 2023 is already off to a hot start. Argentina suffered record late-summer heat in March; a heat wave struck India in April; Vietnam, Laos, and Thailand set records in May; the same month, heat left twelve million people under an advisory in the Pacific Northwest and fueled forest fires in Canada. And it is only going to get worse. In March the Intergovernmental Panel on Climate Change predicted that Earth would cross the 1.5°C warming threshold by the early 2030s. Other researchers warned that climate change would cause more frequent heat waves and lead to thousands of deaths. Europe reported over 15,000 heat-related deaths in 2022, and if long-term forecasts hold true, high mortality in more countries is just a matter of time.

The trouble is not ignorance: we know that heat can kill. Humans have recognized the threat for millennia, and over the last two centuries they have scrutinized heat wave mortality to understand who is most at risk and to develop strategies to prevent those deaths. Still people die. Similarly, we have developed strategies that could moderate climate disaster due to global warming, but our fossil fuel emissions continue to rise. The trouble is that too many continue to do nothing in the face of this knowledge. Understanding the history of thinking about heat and heat waves, and recognizing some of the obstacles to action, can help us identify opportunities and points of leverage for action toward a cooler future.

Human concern with heat is ancient. The Old Testament tells how Manasseh “was overcome by the burning heat” and died while overseeing a barley harvest. Greek philosophers divided the world into a torrid zone straddling the equator, two temperate zones, and two frigid zones. Since the torrid zone was thought too hot for human survival, Greeks could not travel to meet anyone who lived in the southern temperate zone. Ancient physicians believed that human bodies adapted themselves to their environments. If people moved to a new place, the idea went, the mismatch between body and environment could cause disease, so travelers had to adapt their clothing, diet, and activity until they acclimatized.

Such anxieties intensified as Europeans set out after Columbus to seize lands worldwide. The tales of conquistadors and colonists are full of heat-related woe. John Winthrop, the first governor of the Massachusetts Bay Colony, described lethal heat in June 1637. Returning to Boston from Ipswich, he traveled at night because the heat “was so extreme.” Other English colonists, newly arrived, died. Even after Europeans learned how to live in the Caribbean, Africa, and India, fear of heat was never far from their minds, and as American settlers seized indigenous lands in the Ohio and Mississippi river valleys, medical advice manuals warned of the dangers of exertion during summer heat.

For millennia humans had worried what would happen to their bodies if they moved to new environments. In the nineteenth century, however, they realized that they were changing the environment around them. With the advent of coal-powered industrialization, cities in England filled with smoke; temperature measurements in and around London in the 1810s showed that the city was 2°F warmer than its countryside. This “urban heat island” effect was soon reported across Europe.

Specific places in the built environment were especially dangerous. In July 1878 an Irish cook recently arrived in Boston was found unconscious in her attic apartment with a temperature of 109.2°F; doctors saved her with an ice-water sponge bath. British physiologist J. S. Haldane conducted studies, often on himself, in Cornish mines and found that even modest exertion caused overheating. Boiler rooms of steamships could reach 170°F: many stokers fell ill and some even died of heatstroke. Trying to discern the safest way to extract labor from workers in hot conditions, researchers studied the effects of heat, humidity, air flow, exertion, and clothing. Using thermometers and hygrometers, they developed indices to measure heat stress, including the wet-bulb temperature, which gauges how much cooling the evaporation of sweat can provide.

By the 1850s deaths from sunstroke appeared regularly in the medical literature. Boston saw a “frightful increase” in its death counts in July 1872, with twenty-eight from sunstroke. A heat wave in 1876 killed 105 people in New York, and heat waves struck Boston again in 1892. The problem was worst in Boston’s laundries and sugar refineries. One victim, brought to Massachusetts General Hospital by Cambridge police, had a temperature of 115°F—the limit of the hospital’s thermometer. Dozens died. The toll was higher at Boston City Hospital, which cared for a poorer and more vulnerable community, people “obliged to work at all times, irrespective of weather and in exposed situations.”

The problems with heat continued for decades. Scorching heat struck the eastern United States in 1901; all told, over 9,500 people might have died. When nearly 400 people died in a Boston heat wave in 1911, physicians identified many predisposing factors, including exertion, pre-existing diseases, “imperfect acclimatization,” and debility (“most prominently alcoholism”). The United States then experienced some of its hottest weather on record in the 1930s. Heat waves contributed to the devastating Dust Bowl; government officials estimated that over 15,000 people died from heat, nearly 5,000 of them in 1936 alone, though an exact toll was difficult to establish because researchers did not use standardized criteria for reporting sunstroke, heatstroke, heat exhaustion, and heat-related deaths. Mary Gover, a statistician with the Public Health Service, tried to decipher heat mortality in 1938. Compiling data from eighty-six U.S. cities, she noticed two interesting effects. First, mortality during a heat wave correlated best not with the peak temperatures but with the deviation from usual temperatures. Second, when two heat waves struck a city in one summer, the second killed fewer people. She did not speculate about why this might be, but both phenomena suggested that humans adapted in some way to heat.

And people could act to protect themselves. When a heat wave struck New York in 1937, eight “aged residents” at the Home of Old Israel died on a single day. After that, the home’s physician implemented reforms. Whenever heat waves began, staff urged a regimen of lighter clothing, less exercise, frequent bathing, a lighter diet, and saltier foods. They monitored residents to ensure that all were sweating normally; anyone who wasn’t was encouraged to drink more. When a heat wave struck again in August 1948, this time more severe than the one eleven years earlier, the city suffered over 1,000 excess deaths—but no one died at the home.

Despite increasing knowledge of the risk and increasing understanding of how to prevent deaths, summer heat continued to kill. The summer of 1966 was especially deadly. St. Louis suffered 500 heat-related deaths. New York suffered 1,000. Heat didn’t just kill directly; it frazzled nerves and increased urban violence. “The oppressiveness of heat waves in cities is emphasized by increased rates of homicide and by clashes with police in the streets,” concluded one report. Other work indicated that the problem may have been worse than initially thought. When a British researcher conducted an “exhaustive analysis” of U.S. data, he argued that it was essential to consider excess deaths, not just those specifically attributed to heat, and doing so raised the toll of heat mortality tenfold. Another researcher argued that it no longer made sense to discuss an urban heat island. The city, instead, had become an “urban death island.”

Other research revealed a puzzling twist: the people at highest risk of heat mortality had changed. Reports earlier in the century had emphasized the risk faced by young adult laborers, but by the 1960s mortality was concentrated in poor and elderly people, many with preexisting conditions and crowded into low-quality housing.


Thinking about race also shifted. In the scientific racism of the eighteenth and nineteenth centuries, doctors (and slavers) had argued that Africans were uniquely suited for labor in hot climates. (During a 1916 heat wave, for example, doctors in Chicago puzzled over the death of an African American person, finding it “interesting in view of the often expressed view that negroes possess a special immunity against the effects of heat.”) Prevailing opinion held that European immigrants were at greatest risk. Doctors in Boston were especially concerned about Irish immigrants but were not sure about the source of their risk. As two researchers wrote in 1933, while “a marked racial susceptibility may exist,” the truth was “obscured” by other factors, including their work as unskilled laborers, their “habits of eating and drinking,” and their long habitation in the mild Irish climate. But by the 1960s, thinking had changed. Heat wave mortality in St. Louis in 1966 was higher among African Americans. Researchers again acknowledged that they could not tell whether the explanation was biological or social. “This does not constitute proof, however, that the nonwhite individual may be less tolerant to heat,” one study concluded. “Overcrowding, poorer housing conditions, lower economic status, and poorer general health might also contribute to the observed differences.”

Heat waves in the 1960s also revealed the Faustian bargain of air conditioning. AC was a boon for people who could afford it. While Los Angeles suffered many heat-related deaths in 1963, the toll was far lower than would have been predicted based on the temperatures, evidently because many people had used AC. But many people could not afford it. Moreover, because AC units pump heat outdoors and also release the waste heat of the energy they consume, their net effect is to warm the local environment, with unfair consequences. As one researcher explained in 1972, “this will also increase thermal stresses on the inhabitants of the central city not fortunate enough to have air conditioning.”

The summer of 1966 reminded health officials that heat waves “are not a thing of the past, but a recurrent meteorological fact.” The continuing deaths demonstrated “our failure to develop effective preventive measures for some heat-susceptible segments of our urban populations.” Researchers hoped that improving knowledge of risk would provide “clues to the possible prevention of such deaths.” But good intentions did not solve the problem. A July 1980 heat wave shattered past records; over 10,000 people died. Memphis, St. Louis, and Kansas City were hit hard. Reports emphasized familiar risk factors—age, poverty, preexisting conditions, and race (“the inter-city black elderly”). Memphis officials attributed the race disparity to “poor housing; in particular, lack of air-conditioning and adequate ventilation.”

The U.S. Senate’s Special Committee on Aging held hearings that month. As Senator Lawton Chiles explained in his opening statement, “The fact that the heat has caused the deaths of 1,200 persons is shocking.” Senators were outraged by the plight of elderly people. They heard testimony about cash-strapped elders forced to choose among electricity, food, and medicines: “Some actually are reluctant to plug in their fans for fear of increasing their bills. They don’t plug in, and sometimes it costs them their lives.” Other elders worried about crime. Senator Jim Sasser related a “particularly tragic” story: “An elderly woman, living in a Memphis housing project, was robbed of $91 one day during the heat crisis. The next night because of fear of robbers and burglars she kept her windows closed and she died during the night, apparently because of the heat.”

A follow-up hearing in 1983 condemned how unprepared the United States seemed. As far as the senators could tell, “No planning for heat waves took place in advance of the summer of 1980.” Their explanation is stunning: “The Nation was taken by surprise. Unaware of the environmental danger, uninformed about the special vulnerability of the elderly, and lacking specific emergency plans, State and local governments could not deal effectively with the crisis. By the time authorities realized the magnitude of the problem, thousands had died.” It is baffling, though, that anyone in 1980 could have been stunned: heat wave mortality had been a fixture of American summers for a century.

The 1980 heat wave did prompt some action. Memphis established a sentinel system in emergency departments to detect increases in heat-related illnesses and set up a twenty-four-hour hotline that residents could call. Officials issued advisories through print and broadcast media during heat waves about the importance of decreasing activity, increasing fluids, and seeking medical care promptly. Inspectors visited high-risk sites, including nursing homes. Citizens were encouraged to check in with elderly relatives, friends, and neighbors. Officials reached out to the highest-risk people through anyone who might have a connection, including visiting nurses, Meals on Wheels providers, or postal carriers. After implementing this system, Memphis averaged just two heat deaths per year.

In June 1981 the Centers for Disease Control (CDC) published the first of what would become a nearly annual ritual of warning about summer heat. The note, in Morbidity and Mortality Weekly Report, reviewed the 1980 heat wave deaths in St. Louis and Kansas City. It discussed risk factors—including low socioeconomic status, “race other than white,” alcoholism, mental illness, lack of AC, lack of shade, and living on the upper floors of a building—and recommended targeting preventive efforts on urban areas, noting that air-conditioned shelters existed but had been under-utilized. It reiterated these messages in 1982. The CDC also worked to create a better system, the “Summer Mortality Surveillance Project,” to track heat threats by collecting data from medical examiners. By 1983 sixteen cities participated in the program. With better, more comprehensive tracking and reporting, the hope was, cities might be more prepared should a heat wave strike in the future.

More reports followed regularly—in 1984 (St. Louis, Georgia, and New York), 1989 (Missouri), 1993 (the United States), and 1994 (Philadelphia). The CDC’s 1989 review of natural disasters noted that even if heat waves lacked the dramatic power of earthquakes, hurricanes, or tornadoes, they were more lethal than all the others. In the summer of 1995 the CDC published still another warning about summer heat. The United States suffered at least 240 heat-related deaths a year, but in years when heat waves struck, the report noted, the toll was far higher. The report highlighted the usual risk groups and recommended the usual preventive measures. Just two weeks after the report a disastrous heat wave hit Chicago; the heat index peaked at 119°F on July 13, and deaths jumped from 49 on July 14 to 162 the following day. The Cook County morgue, overwhelmed, stored bodies in refrigerated trucks, fueling a media spectacle. When the CDC issued its preliminary analysis in August, it seemed frustrated: “Heat-related mortality is preventable,” it insisted. Yet Chicago’s leaders—amazingly—claimed that they had not been aware of the danger. “What we’ve not appreciated before,” said the city’s deputy health commissioner, “is that heat can kill.”

The commissioner’s surprise should boggle the mind—all the more so because by 1995, global warming had become a growing concern. Scientists had first hypothesized about the “greenhouse effect” in the nineteenth century; in the 1980s many became confident that human-caused global warming was real. When James Hansen warned the Senate in 1986 that significant warming could occur within five to fifteen years, the Senate requested that the Environmental Protection Agency (EPA) investigate. The agency’s 1989 report delivered a dire forecast: global warming would soon cause severe disruptions to human societies. Human health would suffer. Scientists described several pathways that linked global warming to increased mortality, from the spread of malaria to disruptions of the food supply. Extreme heat figured prominently. One study modeled the impact of heat in a warming world and concluded that if carbon dioxide levels doubled, then heat-related mortality in fifteen major U.S. cities would increase more than sixfold, from 1,156 to 7,402 deaths annually.

When the CDC published its warning about heat-related mortality in June 1989, it followed its familiar script but added a new comment: “Growing scientific and public concern about the potential for global warming due to the ‘greenhouse effect’ has focused attention on the health effects of heat during the summer,” the authors wrote. But this concern soon faded from view. The agency’s June 1995 warning about summer heat did not mention global warming, nor did its initial analysis of the Chicago heat wave mortality, its definitive analysis that followed in 1996, or the editorial that accompanied it. (I had been following the story closely and pointed out that the CDC’s analysis left out some well-known risk factors and ignored global warming. The authors addressed my questions about race and poverty but said nothing about the climate crisis.)

In the aftermath of the Chicago heat wave, the CDC continued to issue its nearly annual warnings. Ten of these, from 1997 through 2013, said nothing about the looming climate crisis. Just two did. A July 2001 report about heat mortality in Los Angeles alluded indirectly to global warming, warning of the potential for an increase in deaths from “more periods of extreme heat in future summers.” A 2013 report about heat in New York City warned of the “longer and hotter heat waves projected into the next century and beyond.”

In 2016 the CDC teamed up with the EPA to publish a definitive statement. The message was simple: heat killed, it would only get worse, and action had to be taken. “More extreme heat will likely lead to an increase in heat-related illnesses and deaths, especially if people and communities don’t take steps to adapt and protect themselves,” the authors warned.

The seven years since this ominous warning have been the hottest on record. When a “heat dome” settled over the Pacific Northwest in June 2021, Portland reached 116°F, 42°F hotter than normal. Heat-related illnesses surged in Oregon and Washington, with over 1,000 cases recorded in one day alone. While one estimate reported 183 deaths, another put the count at 600. In its annual heat alert in June 2022 the CDC reported that the United States suffers an average of 702 heat-related deaths a year and over 67,000 heat-related emergency department visits. This is surely an undercount, in part because the COVID-19 pandemic has confounded any estimate of “excess deaths.”

When heat arrived in 2022, the world’s media paid close attention. Yet even with heightened attention, is action likely? Health officials have long known about the problem, who is at risk, and what can be done, while repeatedly claiming surprise in the wake of heat-related deaths. Several factors are likely at work in explaining this situation.

One is that heat-related mortality is still a small cause of death in the United States. The count has increased from 200 heat-related deaths a year in 1981 to over 700 now. Adjusting for population growth, the mortality rate has doubled, and experts have repeatedly suggested that the actual count might be ten times higher. But even if heat causes 7,000 deaths, that’s still just 1 percent of the mortality caused by heart disease. A skeptic might argue that the problem doesn’t deserve more attention. A worrier might respond that we should intervene before things get far worse—as they have in Europe. The great achievements of public health, from urban sanitation to vaccination, have come from planning, investment, and prevention. It would be far better to preempt heat-related mortality than to wait until its growing toll becomes intolerable.

A second factor is that most U.S. heat deaths have been concentrated in poor, minoritized, and marginalized communities—the result of inadequate housing, no AC, insufficient green space, and other conditions of poverty. This marginalization of risk plays out on the global stage as well. The United States has produced the greatest share of global emissions but is not the country at highest risk of heat; instead, the greatest threat is faced in South Asia, Africa, and the Middle East. Countries in Africa, which have produced only 3 percent of human CO2 emissions, face a 118-fold increase in the risk of extreme heat. Heat mortality might play out on the margins of global consciousness, in countries ill-equipped to intervene.

A third issue has been the temptation to explain away heat-related deaths as unimportant. When over 700 people died in Chicago in 1995, the city’s mayor, Richard M. Daley, dismissed the estimate of Cook County medical examiner Edmund Donoghue: “By Donoghue’s count, Daley remarked, ‘everyone in the summer that dies will die of heat.’” More serious observers have raised a subtler question. People who die in heat waves are often elderly and sick, people who might have died soon anyway. Maybe the heat didn’t really kill them—it just accelerated their impending demise. This phenomenon, variously named “anticipation,” “displacement,” or “harvesting,” predicts that the spike in mortality seen during a heat wave, when vulnerable people die, will generate a mortality deficit in the weeks that follow. This morbid line of thought has been a common way of dismissing deaths whenever the elderly or disabled are most at risk. It appeared repeatedly during the COVID-19 pandemic, with skeptical observers arguing that COVID-19 simply hastened the death of people already on death’s door. Some politicians even argued that the elderly could be sacrificed if needed to keep the economy running.

Does “anticipation” actually happen? Studies have been inconsistent. An initial analysis of mortality in St. Louis in 1966 found no evidence. A 1993 reanalysis of the 1966 data found that displacement accounted for 40 percent of the deaths in New York City that year and 19 percent of the deaths in St. Louis. A study of the Chicago heat wave noted that while displacement explained 26 percent of the deaths, the effect was less pronounced for African Americans. An analysis of 3,096 excess deaths in nine French cities in 2003 concluded that only 253 of those reflected harvesting. While the magnitude of displacement remains unclear, social scientists have been critical of the concept. Writing about the 2003 French heat wave, historian Richard Keller argued that officials invoked displacement to absolve themselves of responsibility for the mortality: “the harvesting concept is more exculpatory than explanatory, amounting to a dismissal of life on the margins rather than a clear picture of disaster’s effects on mortality,” he wrote.

A final problem may be faith in societies’ ability to adapt. Air conditioning works wonders, allowing millions to live comfortably in hot climates—if you have access. The high mortality suffered in Europe in 2022 might reflect the low prevalence of AC there: just 5 percent of households in France have air conditioning, compared to nearly 90 percent in the United States. Many may reasonably call for access to air conditioning—ideally powered by green electricity—to be expanded. But is this realistic? A 2019 estimate found that there are a billion single-room air conditioners at work globally today; this could increase to 4.5 billion by 2050, and at that point AC would consume 13 percent of the world’s electricity. And we cannot escape the laws of thermodynamics: AC leads to a net increase in local temperatures. What would a world of ubiquitous AC involve? A futurist might imagine humans living in domed cities, with comfortably cool interiors, while Earth outside bakes under anthropogenic heat.

But air conditioning isn’t essential, some point out: humans can acclimate to heat without it. India’s low reported mortality in 2022 offered hope. “Much of the equatorial band of the planet is already ‘acclimatized’—both culturally and, to some degree, physically—to temperatures that would in the northern latitudes produce widespread heat mortality,” wrote David Wallace-Wells for the New York Times. It is possible, if optimistic, to think that the gradual pace of global warming will give human populations time to adapt, but there are limits. Scientists fear that if heat and humidity reach a certain threshold—a “wet-bulb temperature” of 95°F—human bodies will be unable to shed the heat generated by their own metabolic activity; death could follow within 24 hours. That threshold has been breached, briefly, fourteen times, in the Middle East and South Asia. Delhi came close (92.7°F) in June 2022, and the threshold will be hit more as Earth warms. Where this happens, humans will not be able to survive without AC.

Heat-related mortality in the United States remains low, but it will surely rise. We must do more than hang our hopes on acclimatization or AC. Countries could take decisive action to slash emissions, invest in carbon capture, and moderate the harm we have caused to the environment. Architects could design buildings that do not rely on AC. Cities could continue to design proactive heat response plans, and—like Memphis—actually implement them. And they could commit to deep investments in urban infrastructure, such as green roofs, cool pavements, and more trees and vegetation. But will they?

Most governments have not yet done enough. Knowledge of the problem and its possible solutions has not yet motivated action. Instead, human societies have repeatedly forgotten about the threat of heat and been surprised when it strikes. Ongoing research is of course needed to characterize how heat mortality changes in a warming world. Social movements can take this knowledge, refract it through the lens of environmental justice, and demand that officials act. The obstacles, however, are daunting. What will it take to motivate massive investments? There are precedents. Most cities have invested in sewers and clean water; many have invested in public transportation. We need to summon the same wisdom to invest in public health and prevention to cool our cities before many more die.

Editors’ Note: For a fully footnoted version of this article, with complete references, see the version on the author’s website.

We’re interested in what you think. Submit a letter to the editors at letters@bostonreview.net. Boston Review is nonprofit, paywall-free, and reader-funded.