Artificial Intelligence (AI) is not likely to make humans redundant. Nor will it create superintelligence anytime soon. But like it or not, AI technologies and intelligent systems will make huge advances in the next two decades—revolutionizing medicine, entertainment, and transport; transforming jobs and markets; enabling many new products and tools; and vastly increasing the amount of information that governments and companies have about individuals. Should we cherish and look forward to these developments, or fear them?

There are reasons to be concerned. Current AI research is too narrowly focused on making advances in a limited set of domains and pays insufficient attention to its disruptive effects on the very fabric of society. If AI technology continues to develop along its current path, it is likely to create social upheaval for at least two reasons. For one, AI will affect the future of jobs. Our current trajectory automates work to an excessive degree while refusing to invest in human productivity; further advances will displace workers and fail to create new opportunities (and, in the process, miss out on AI’s full potential to enhance productivity). For another, AI may undermine democracy and individual freedoms.

Each of these directions is alarming, and the two together are ominous. Shared prosperity and democratic political participation do not just critically reinforce each other: they are the two backbones of our modern society. Worse still, the weakening of democracy makes formulating solutions to the adverse labor market and distributional effects of AI much more difficult. These dangers have only multiplied during the COVID-19 crisis. Lockdowns, social distancing, and workers’ vulnerability to the virus have given an additional boost to the drive for automation, with the majority of U.S. businesses reporting plans for more automation.

None of this is inevitable, however. The direction of AI development is not preordained. It can be altered to increase human productivity, create jobs and shared prosperity, and protect and bolster democratic freedoms—if we modify our approach. In order to redirect AI research toward a more productive path, we need to look at AI funding and regulation, the norms and priorities of AI researchers, and the societal oversight guiding these technologies and their applications.

Our modern compact

The postwar era witnessed a bewildering array of social and economic changes. Many social scientists in the first half of the twentieth century predicted that modern economies would lead to rising inequality and discontent, ultimately degenerating into various types of authoritarian governments or endless chaos.

The events of the interwar years seemed to confirm these gloomy forecasts. But in postwar Western Europe and North America—and several other parts of the globe that adopted similar economic and political institutions—the tide turned. After 1945 industrialized nations came to experience some of their best decades in terms of economic growth and social cohesion—what the French called Les Trente Glorieuses, the thirty glorious years. And that growth was not only rapid but also broadly shared. Over the first three decades after World War II, wages grew rapidly for all workers in the United States, regardless of education, gender, age, or race. Though this era was not without its political problems (it coincided with civil rights struggles in the United States), democratic politics worked: there was quite a bit of bipartisanship when it came to legislation, and Americans felt that they had a voice in politics. These two aspects of the postwar era were critical for social peace—a large fraction of the population understood that they were benefiting from the economic system and felt they had a voice in how they were governed.

How did this relative harmony come about? Much of the credit goes to the trajectory of technological progress. The great economist John Maynard Keynes, who recognized the fragility of social peace in the face of economic hardship more astutely than most others, famously predicted in 1929 that economic growth would create increasing joblessness in the twentieth century. Keynes understood that there were tremendous opportunities for industrial automation—replacing human workers with machines—and concluded that declining demand for human labor was an ineluctable consequence of technological progress. As he put it: “We are being afflicted with a new disease of which . . . [readers] . . . will hear a great deal in the years to come—namely, technological unemployment.”

Yet the technologies of the next half century turned out to be rather different from what Keynes had forecast. Demand for human labor grew and then grew some more. Keynes wasn’t wrong about the forces of automation; mechanization of agriculture—substituting harvesters and tractors for human labor—caused massive dislocation and displacement for almost half of the workforce in the United States. Crucially, however, mechanization was accompanied by the introduction of new tasks, functions, and activities for humans. Agricultural mechanization was followed by rapid industrial automation, but this too was counterbalanced by other technological advances that created new tasks for workers. Today the majority of the workforce in all industrialized nations engages in tasks that did not exist when Keynes was writing (think of all the tasks involved in modern education, health care, communication, entertainment, back-office work, design, technical work on factory floors, and almost all of the service sector). Had it not been for these new tasks, Keynes would have been right. They not only spawned plentiful jobs but also generated demand for a diverse set of skills, underpinning the shared nature of modern economic growth.

Labor market institutions—such as minimum wages, collective bargaining, and regulations introducing worker protection—greatly contributed to shared prosperity. But without the more human-friendly aspects of technological change, they would not have generated broad-based wage growth. If there were rapid advances in automation technology and no other technologies generating employment opportunities for most workers, minimum wages and collective wage demands would have been met with yet more automation. However, when these institutional arrangements protecting and empowering workers coexist with technological changes increasing worker productivity, they encourage the creation of “good jobs”—secure jobs with high wages. It makes sense to build long-term relationships with workers and pay them high wages when they are rapidly becoming more productive. It also makes sense to create good jobs and invest in worker productivity when labor market institutions rule out the low-wage path. Hence, technologies boosting human productivity and labor market institutions protecting workers were mutually reinforcing.

Indeed, good jobs became a mainstay of many postwar economies, and one of the key reasons that millions of people felt they were getting their fair share from the growth process—even if their bosses and some businessmen were becoming fabulously rich in the process.

Why was technology fueling wage growth? Why didn’t it just automate jobs? Why was there a slew of new tasks and activities for workers, bolstering wage and employment growth? We don’t know for sure. Existing evidence suggests a number of factors that may have helped boost the demand for human labor. In the decades following World War II, U.S. businesses operated in a broadly competitive environment. The biggest conglomerates of the early twentieth century had been broken up by Progressive Era reforms, and those that became dominant in the second half of the century, such as AT&T, faced similar antitrust action. This competitive environment produced a ferocious appetite for new technologies, including those that raised worker productivity.

These productivity enhancements created just the type of advantage firms were pining for in order to surge ahead of their rivals. Technology was not a gift from the heavens, of course. Businesses invested heavily in technology and they benefited from government support. It wasn’t just the eager investments in higher education during the Sputnik era (lest the United States fall behind the Soviet Union). It was also the government’s role as a funding source, major purchaser of new technologies, and director and coordinator for research efforts. Via funding from the National Science Foundation, the National Institutes of Health, research and development tax credits, and perhaps even more importantly the Department of Defense, the government imprinted its long-term perspective on many of the iconic technologies of the era, including the Internet, computers, nanotechnology, biotech, antibiotics, sensors, and aviation technologies.

The United States also became more democratic during this period. Reforms during the Progressive and New Deal Eras reduced the direct control of large corporations and wealthy tycoons over the political process. The direct election of senators, enacted in 1913 in the 17th Amendment, was an important step in this process. Then came the cleaning up of machine politics in many northern cities, a process that took several decades in the first half of the century. Equally important was the civil rights movement, which ended some of the most undemocratic aspects of U.S. politics (even if this is still a work in progress). Of course, there were many fault lines, and not just Black Americans but many groups did not have sufficient voice in politics. All the same, when the political scientist Robert Dahl set out to investigate “who governs” local politics in New Haven, the answer wasn’t an established party or a well-defined elite. Power was pluralistic, and the involvement of regular people in politics was key for the governance of the city.

Democracy and shared prosperity thus bolstered each other during this epoch. Democratic politics strengthened labor market institutions protecting workers and efforts to increase worker productivity, while shared prosperity simultaneously increased the legitimacy of the democratic system. And this trend was robust: despite myriad cultural and institutional differences, Western Europe, Canada, and Japan followed remarkably similar trajectories to that of the United States, based on rapid productivity growth, shared prosperity, and democratic politics.

The world automation is making

We live in a very different world today. Wage growth since the late 1970s has been much slower than during the previous three decades. And this growth has been anything but shared. While wages for workers at the very top of the income distribution—those in the highest tenth percentile of earnings or those with postgraduate degrees—have continued to grow, workers with a high school diploma or less have seen their real earnings fall. Even college graduates have gone through lengthy periods of little real wage growth.

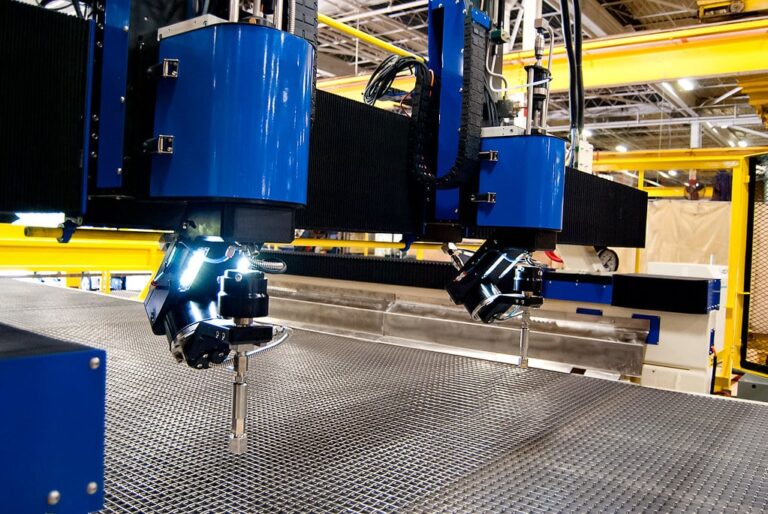

Many factors have played a role in this turnaround. The erosion of the real value of the minimum wage, which has fallen by more than 30 percent since 1968, has been instrumental in the wage declines at the bottom of the distribution. With the disappearance of trade unions from much of the private sector, wages also lagged behind productivity growth. Simultaneously, the enormous increase in trade with China led to the closure of many businesses and large job losses in low-tech manufacturing industries such as textiles, apparel, furniture, and toys. Equally defining has been the new direction of technological progress. While in the four decades after World War II automation and new tasks contributing to labor demand went hand-in-hand, a very different technological tableau began in the 1980s—a lot more automation and a lot less of everything else.

Automation acted as the handmaiden of inequality. New technologies primarily automated the more routine tasks in clerical occupations and on factory floors. This meant that both the demand for and the wages of workers specializing in blue-collar jobs and some clerical functions declined. Meanwhile professionals in managerial, engineering, finance, consulting, and design occupations flourished—both because they were essential to the success of new technologies and because they benefited from the automation of tasks that complemented their own work. As automation gathered pace, wage gaps between the top and the bottom of the income distribution magnified.

The causes of this broad pattern—more automation and less effort directed toward increasing worker productivity—are not well understood. To be sure, much of this predates AI. The rapid automation of routine jobs started with applications of computers, databases, and electronic communication in clerical jobs and with numerical control in manufacturing, and it accelerated with the spread of industrial robots. With breakthroughs in digital technologies, automation may have become technologically easier. However, equally (if not more) important are changes in the institutional and policy environments. Government funding for research—especially the type of blue-sky research leading to the creation of new tasks—dried up. Labor market institutions that pushed for good jobs weakened. A handful of companies with business models focused on automation came to dominate the economy. And government tax policy started favoring capital and automation. Whatever the exact mechanisms, technology became less favorable to labor and more focused on automation.

AI is the next act in this play. The first serious research into AI started in the 1950s, with ambitious and, as it turned out, unrealistic goals. The AI pioneers keenly understood the power of computation and thought that creating intelligent machines was a challenging but achievable aspiration. Two of the early luminaries, Herbert Simon and Allen Newell, said in 1957 that “there are now in the world machines that think, that learn and that create. Moreover, their ability to do these things is going to increase rapidly until—in a visible future—the range of problems they can handle will be coextensive with the range to which the human mind has been applied.” But these hopes were soon dashed. It was one thing to program binary operations for fast computation—a task at which machines had well exceeded human capacities by the early 1950s. It was something completely different to have machines perform more complex tasks, including image recognition, classification, language processing, reasoning, and problem solving. The euphoria and funding for the field dwindled, and AI winter(s) followed.

In the 1990s AI won renewed enthusiasm, albeit with altered ambitions. Rather than having machines think and understand exactly like humans, the new aim was to use cheaply available computation power and the vast amounts of data collected by sensors, present in books and online, and voluntarily given by individuals. The breakthrough came when we figured out how to turn many of the services we wanted into prediction problems. Modern statistical methods, including various types of machine learning, could be applied to the newly available, large, unstructured datasets to perform prediction-based tasks cheaply and effectively. This meant that the path of least resistance for the economic applications of AI was (algorithmic) automation—adapting pattern recognition, object classification, and statistical prediction into applications that could take over many of the repetitive and simple cognitive tasks millions of workers were performing.

What started as an early trend became the norm. Many experts now forecast that the majority of occupations will be fundamentally affected by AI in the decades to come. AI will also replace more skilled tasks, especially in accounting, finance, medical diagnosis, and mid-level management. Nevertheless, current AI applications are still primarily replacing relatively simple tasks performed by low-wage workers.

The state of democracy and liberty

Alongside this story of economic woes, democracy hasn’t fared too well, either—across both the developed and the developing world. Three factors have been especially important for U.S. democracy over the last thirty years.

First, polarization has increased considerably. In the decades that followed World War II, U.S. lawmakers frequently crossed the aisle and supported bills from the other party. This rarely occurs today. Polarization makes effective policy-making much harder—legislation to deal with urgent challenges becomes harder to pass and, when it does pass, it lacks the necessary legitimacy. This was painfully obvious, for example, in the efforts to enact a comprehensive health care reform to control rising costs and provide coverage to millions of Americans who did not have access to health insurance.

Second, the traditional media model, with trusted and mostly balanced sources, came undone. Cable news networks and online news sources have rendered the electorate more polarized and less willing to listen to counterarguments, making democratic discourse and bipartisan policy-making even more difficult.

Third, the role of money in politics has increased by leaps and bounds. As political scientists Larry Bartels and Martin Gilens have documented, even before the fateful Supreme Court decision on Citizens United in 2010 opened the floodgates to corporate money, the richest Americans and the largest corporations had become disproportionately influential in shaping policy via lobbying efforts, campaign contributions, their outsized social status, and their close connections with politicians.

AI only magnified these fault lines. Though we are still very much in the early stages of the digital remaking of our politics and society, we can already see some of the consequences. AI-powered social media, including Facebook and Twitter, have already completely transformed political communication and debate. AI has enabled these platforms to target users with individualized messages and advertising. Even more ominously, social media has facilitated the spread of disinformation—contributing to polarization, lack of trust in institutions, and political rancor. The Cambridge Analytica scandal illustrates the dangers. The company acquired the private information of about 50 million individuals from data shared by around 270,000 Facebook users. It then used these data to design personalized and targeted political advertising in the Brexit referendum and the 2016 U.S. presidential election. Many more companies are now engaged in similar activities, with more sophisticated AI tools. Moreover, recent research suggests that standard algorithms used by social media sites such as Facebook reduce users’ exposure to posts from different points of view, further contributing to the polarization of the U.S. public.

Other emerging applications of AI may be even more threatening to democracy and liberty around the world. Basic pattern recognition techniques are already powerful enough to enable governments and companies to monitor individual behavior, political views, and communication. For example, the Chinese Communist Party has long relied on these technologies for identifying and stamping out online dissent and opposition, for mass surveillance, and for controlling political activity in parts of the country where there is widespread opposition to its rule (such as Xinjiang and Tibet). As Edward Snowden’s revelations laid bare, the U.S. government eagerly used similar techniques to collect massive amounts of data from the communications of both foreigners and American citizens. Spyware programs—such as Pegasus, developed by the Israeli firm NSO Group, and the Da Vinci and Galileo platforms of the Italian company Hacking Team—enable users to take control of the data of individuals thousands of miles away, break encryption, and remotely track private communications. Future AI capabilities will go far beyond these activities.

Another area of considerable concern is facial recognition, currently one of the most active fields of research within AI. Though facial recognition technology has legitimate uses in personal security and defense against terrorism, its commercial applications remain unproven. Much of the demand for this technology originates from mass surveillance programs.

With AI-powered technologies already able to collect information about individual behavior, track communications, and recognize faces and voices, it is not far-fetched to imagine that many governments will be better positioned to control dissent and discourage opposition. But the effects of these technologies may well go beyond silencing governments’ most vocal critics. With the knowledge that such technologies are monitoring their every behavior, individuals will be discouraged from voicing criticism and may gradually reduce their participation in civic organizations and political activity. And with the increasing use of AI in military technologies, governments may be further empowered to act (even more) despotically toward their own citizens—as well as more aggressively toward external foes.

Individual dissent is the mainstay of democracy and social liberty, so these potential developments and uses of AI technology should alarm us all.

The AI road not taken

Much of the diagnosis I have presented thus far is not new. Many decry the disruption that automation has already produced and is likely to cause in the future. Many are also concerned about the deleterious effects new technologies might have on individual liberty and democratic procedure. But the majority of these commentators view such concerns with a sense of inevitability—they believe that it is in the very nature of AI to accelerate automation and to enable governments and companies to control individuals’ behavior.

Yet society’s march toward joblessness and surveillance is not inevitable. The future of AI is still open and can take us in many different directions. If we end up with powerful tools of surveillance and ubiquitous automation (with not enough tasks left for humans to perform), it will be because we chose that path.

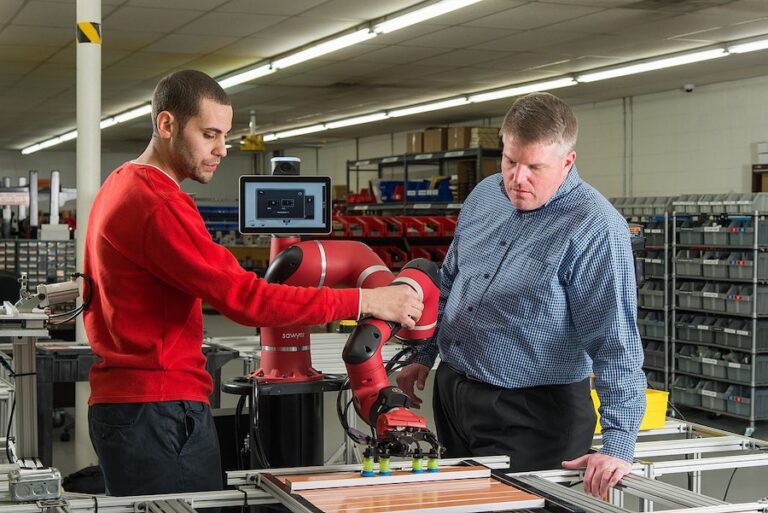

Where else could we go? Even though the majority of AI research has been targeted toward automation in the production domain, there are plenty of new pastures where AI could complement humans. It can increase human productivity most powerfully by creating new tasks and activities for workers.

Let me give a few examples. The first is education, an area where AI has penetrated surprisingly little thus far. Current developments, such as they are, go in the direction of automating teachers—for example, by implementing automated grading or online resources to replace core teaching tasks. But AI could also revolutionize education by empowering teachers to adapt their material to the needs and attitudes of diverse students in real time. We already know that what works for one individual in the classroom may not work for another; different students find different elements of learning challenging. AI in the classroom can make teaching more adaptive and student-centered, generate distinct new teaching tasks, and, in the process, increase the productivity of—and the demand for—teachers.

The situation is very similar in health care, although this field has already witnessed significant AI investment. Up to this point, however, there have been few attempts to use AI to provide new, real-time, adaptive services to patients by nurses, technicians, and doctors. Similarly, AI in the entertainment sector can go a long way toward creating new, productive tasks for workers. Intelligent systems can greatly facilitate human learning and training in most occupations and fields by making adaptive technical and contextual information available on demand. Finally, AI can be combined with augmented and virtual reality to provide new productive opportunities to workers in blue-collar and technical occupations. For example, it can enable them to achieve a higher degree of precision so that they can collaborate with robotics technology and perform integrated design tasks.

In all of these areas, AI can be a powerful tool for deploying the creativity, judgment, and flexibility of humans rather than simply automating their jobs. It can help us protect their privacy and freedom, too. Plenty of academic research shows how emerging technologies—differential privacy, adversarial neural cryptography, secure multi-party computation, and homomorphic encryption, to name a few—can protect privacy and detect security threats and snooping, but this research is still marginal to commercial products and services. There is also growing awareness among both the public and the AI community that new technologies can harm public discourse, freedom, and democracy. In this climate many are demanding a concerted effort to use AI for good. Nevertheless, it is remarkable how much of AI research still focuses on applications that automate jobs and increase the ability of governments and companies to monitor and manipulate individuals. This can and needs to change.

The market illusion

One objection to the argument I have developed is that it is unwise to mess with the market. Who are we to interfere with the innovations and technological breakthroughs the market is generating? Wouldn’t intervening sacrifice productivity growth and even risk our technological vibrancy? Aren’t we better off just letting the market mechanism deliver the best technologies and then using other tools, such as tax-based redistribution or universal basic income, to make sure that everybody benefits?

The answer is no, for several reasons. First, it isn’t clear that the market is doing a great job of selecting the right technologies. It is true that we are in the midst of a period of prodigious technological creativity, with new breakthroughs and applications invented every day. Yet Robert Solow’s thirty-year-old quip about computers—that they are “everywhere but in the productivity statistics”—is even more true today. Despite these mind-boggling inventions, current productivity growth is strikingly slow compared to the decades that followed World War II. This sluggishness is clear from the standard statistic that economists use for measuring how much the technological capability of the economy is expanding—the growth of total factor productivity (TFP). TFP growth answers a simple question: If we kept the amount of labor and capital resources we are using constant from one year to the next, and only our technological capabilities changed, how much would aggregate income grow? TFP growth in much of the industrialized world was rapid during the decades that followed World War II, and has fallen sharply since then. In the United States, for example, the average TFP growth was close to 2 percent a year between 1920 and 1970, and has averaged only a little above 0.5 percent a year over the last three decades. So the case that the market is doing a fantastic job of expanding our productive capacity isn’t ironclad.
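
To make the TFP definition concrete, here is a minimal growth-accounting sketch. It assumes a Cobb-Douglas production function with a capital share of one third, which is a common textbook convention and not a figure from this essay, and the function names are hypothetical. It also compounds the two average growth rates cited above over thirty years to show how much the gap accumulates.

```python
def tfp_growth(output_growth, capital_growth, labor_growth, capital_share=1 / 3):
    """Growth-accounting residual: output growth not explained by input growth.

    Assumes Cobb-Douglas technology; capital_share = 1/3 is an illustrative
    assumption, not a number taken from the essay.
    """
    input_growth = capital_share * capital_growth + (1 - capital_share) * labor_growth
    return output_growth - input_growth


def cumulative_gain(annual_rate, years):
    """Total proportional increase after compounding annual_rate for `years` years."""
    return (1 + annual_rate) ** years - 1


# If labor and capital are held constant, all output growth is TFP growth.
print(tfp_growth(output_growth=0.02, capital_growth=0.0, labor_growth=0.0))

# Compare the two average rates cited in the text over a thirty-year span.
for rate in (0.02, 0.005):
    print(f"{rate:.1%} a year for 30 years -> {cumulative_gain(rate, 30):.1%} cumulative")
```

Under these assumptions, thirty years of 2 percent TFP growth raises the income obtainable from fixed inputs by roughly 81 percent, against roughly 16 percent at 0.5 percent a year.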

The argument that we should rely on the market for setting the direction of technological change is weak as well. In the terminology of economics, innovation creates significant positive “externalities”: when a company or a researcher innovates, many of the benefits accrue to others. This is doubly so for technologies that create new tasks. The beneficiaries are often workers whose wages increase (and new firms that later find the right organizational structures and come up with creative products to make use of these new tasks). But these benefits are not part of the calculus of innovating firms and researchers. Ordinary market forces—which fail to take account of externalities—may therefore deter the types of technologies that have the greatest social value.

This same reasoning is even more compelling when new products produce non-economic costs and benefits. Consider surveillance technologies. The demand for surveillance from repressive (and even some democratic-looking) governments may be great, generating plenty of financial incentives for firms and researchers to invest in facial recognition and snooping technologies. But the erosion of liberties is a notable non-economic cost that is often not taken into account. A similar point holds for automation technologies: it is easy to ignore the vital role that good, secure, and high-paying jobs play in making people feel fulfilled. With all of these externalities, how can we assume that the market will get things right?

Market troubles multiply further still when there are competing technological paradigms, as in the field of AI. When one paradigm is ahead of the others, both researchers and companies are tempted to herd on that leading paradigm, even if another one is more productive. Consequently, when the wrong paradigm surges ahead, it becomes very difficult to switch to more promising alternatives.

Last but certainly not least, innovation responds not just to economic incentives but also to norms. What researchers find acceptable, exciting, and promising is not purely a function of economic reward. Social norms play a key role by shaping researchers’ aims as well as their moral compasses. And if the norms within the research area do not reflect our social objectives, the resulting technological change will not serve society’s best interests.

All of these reasons cast doubt on the wisdom of leaving the market to itself. What’s more, the measures that might be thought to compensate for a market left to itself—redistribution via taxes and the social safety net—are both insufficient and unlikely to work. We certainly need a better safety net. (The COVID-19 pandemic has made that even clearer.) But if we do not generate meaningful employment opportunities—and thus a viable social purpose—for most people in society, how can democracy work? And if democracy doesn’t work, how can we enact such redistributive measures—and how can we be sure that they will remain in place in the future?

Even worse, building shared prosperity based predominantly on redistribution is a fantasy. There is no doubt that redistribution—via a progressive tax system and a robust social safety net—has been an important pillar of shared prosperity in much of the twentieth century (and high-quality public education has been critical). But it has been a supporting pillar, not the main engine of shared prosperity. Jobs, and especially good jobs, have been much more central, bolstered by productivity growth and labor market institutions supporting high wages. We can see this most clearly from the experiences of Nordic countries, where productivity growth, job creation, and shared gains in the labor market have been the bulwark of their social democratic compact. Industry-level wage agreements between trade unions and business associations set an essentially fixed wage for the same job throughout an industry. These collective agreements produced high and broadly equal wages for workers in similar roles. More importantly, the system encouraged productivity growth and the creation of a plentiful supply of good jobs because, with wages largely fixed at the industry level, firms got to keep higher productivity as profits and had strong incentives to innovate and invest.
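
The incentive at work in the Nordic arrangement can be illustrated with a small sketch. All numbers here are hypothetical, and the contrasting "shared wage" rule, in which a firm's wage rises with its own productivity, is an assumption introduced only for comparison; it is not a description of any particular country's system.

```python
INDUSTRY_WAGE = 30.0  # hypothetical wage fixed by an industry-level agreement


def fixed_wage(productivity):
    """Wage set at the industry level: independent of the firm's own productivity."""
    return INDUSTRY_WAGE


def shared_wage(productivity, worker_share=0.6):
    """Hypothetical contrast: the firm's wage rises with its own productivity."""
    return worker_share * productivity


def profit_gain(old_productivity, new_productivity, wage_rule):
    """Change in profit per worker when a firm raises output per worker."""
    before = old_productivity - wage_rule(old_productivity)
    after = new_productivity - wage_rule(new_productivity)
    return after - before


# A firm raises output per worker from 50 to 60 (a gain of 10).
print(round(profit_gain(50.0, 60.0, fixed_wage), 2))   # firm keeps the whole gain
print(round(profit_gain(50.0, 60.0, shared_wage), 2))  # firm keeps only part of it
```

With the wage fixed at the industry level, the firm in this sketch keeps the entire productivity gain as profit, which is the reason given above for its strong incentive to innovate and invest.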

Who controls AI?

If we are going to redirect intelligent systems research, we first have to understand what determines the current direction of research. Who controls AI?

Of course, nobody single-handedly controls research, and nobody sets the direction of technological change. Nonetheless, compared to many other technological platforms—where we see support and leadership from different government agencies, academic researchers with diverse backgrounds and visions, and scores of research labs pushing in distinct directions—AI influence is concentrated in the hands of a few key players. A handful of tech giants, all focused on algorithmic automation—Google (Alphabet), Facebook, Amazon, Microsoft, Netflix, Alibaba, and Baidu—account for the majority of money spent on AI research. (According to a recent McKinsey report, they are responsible for about $20 to $30 billion of the $26 to $39 billion in total private AI investment expenditures worldwide.) Government funding pales in comparison.

While hundreds of universities have vibrant, active departments and labs working on AI, machine learning, and big data, funding from major corporations shapes the direction of academic research, too. As government support for academic research has declined, corporations have come to play a more defining role in academic funding. Even more consequential might be the fact that there is now a revolving door between corporations and universities, with major researchers consulting for the private sector and periodically leaving their academic posts to take up positions in technology companies working on AI.

But the situation may actually be even worse, as leading technology companies are setting the agenda of research in three other significant ways. First, via both their academic relations and their philanthropic arms, tech companies are directly influencing the curriculum and AI fields at leading universities. Second, these companies and their charismatic founders and CEOs have loud voices in Washington, D.C., and in the priorities of funding agencies—the agencies that shape academic passions. Third, students wishing to specialize in AI-related fields often aspire to work for one of the major tech companies or for startups that are usually working on technologies that they can sell to these companies. Universities must respond to the priorities of their students, which means they are impelled to nourish connections with major tech companies.

This isn’t to say that thinking in the field of AI is completely uniform. In an area that attracts hundreds of thousands of bright minds, there will always be a diversity of opinions and approaches—and some who are deeply aware that AI research has social consequences and bears a social responsibility. Nevertheless, it is striking how infrequently AI researchers question the emphasis on automation. Too few researchers are using AI to create new jobs for humans or protect individuals from the surveillance of governments and companies.

How to redirect AI

These are troubling trends. But even if I have convinced you that we need to redirect AI, how exactly can we do it?

The answer, I believe, lies in developing a three-pronged approach. These three prongs are well illustrated by past successes in redirecting technological change toward socially beneficial areas. For instance, in the context of energy generation and use, there have been tremendous advances in low- or zero-carbon emission technologies, even if we are still far away from stemming climate change. These advances owe much to three simultaneous and connected developments. First, government policies produced a measurement framework to understand the amount of carbon emitted by different types of activities and determine which technologies were clean. Based on this framework, government policy (at least in some countries) started taxing and limiting carbon emissions. Then, even more consequentially, governments used research funding and intellectual leadership to redirect technological change toward clean sources of energy—such as solar, wind, and geothermal—and innovations directly controlling greenhouse gas emissions. Second, all this coincided with a change in norms. Households became willing to pay more to reduce their own carbon footprint—for example, by purchasing electric vehicles or using clean sources of energy themselves. They also started putting social pressure on others to do the same. Even more consequential were households’ demands that their employers limit pollution. Third, all of this was underpinned by democratic oversight and pressure. Governments acted because voters insisted that they act; companies changed (even if in some instances these changes were illusory) because their employees and customers demanded change and because society at large turned the spotlight on them.

The same three-pronged approach can work in AI: government involvement, norms shifting, and democratic oversight.

First, government policy, funding, and leadership are critical. To begin with, we must remove policy distortions that encourage excessive automation and generate an inflated demand for surveillance technologies. Governments are the most important buyers of AI-based surveillance technologies. Even if it will be difficult to convince many security services to give up on these technologies, democratic oversight can force them to do so. As I already noted, government policy is also fueling the adoption and development of new automation technologies. For example, the U.S. tax code imposes tax rates around 25 percent on labor but less than 5 percent on equipment and software, effectively subsidizing corporations to install machinery and use software to automate work. Removing these distortionary incentives would go some way toward refocusing technological change away from automation. But it won’t be enough. We need a more active government role to support and coordinate research efforts toward the types of technologies that are most socially beneficial and that are most likely to be undersupplied by the market.

As with climate change, such an effort necessitates a clear focus on measuring and determining which types of AI applications are most beneficial. For surveillance and security technologies, it is feasible, if not completely straightforward, to define which technological applications will strengthen the ability of companies and authoritarian governments to snoop on people and manipulate their behavior. It may be harder in the area of automation—how do you distinguish an AI automation application from one that leads to new tasks and activities for humans? For government policy to redirect research, these guidelines need to be in place before the research is undertaken and technologies are adopted. This calls for a better measurement framework—a tall order, but not a hopeless task. Existing theoretical and empirical work on the effects of automation and new tasks shows that they have very distinct effects on the labor share of value added (meaning how much of the value added created by a firm or industry goes to labor). Greater automation reduces the labor share, while new tasks increase it. Measuring the sum of the work-related consequences of new AI technologies via their impact on the labor share is therefore one promising avenue. Based on this measurement framework, policy can support technologies that tend to increase the labor share rather than those boosting profits at the expense of labor.
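To make the labor-share idea concrete, here is a minimal sketch of how such a measurement might be computed for a single firm or industry before and after it adopts a new technology. It uses only the definition given above (the labor share is the fraction of value added that goes to labor). The names `Observation`, `labor_share`, `classify_change`, the `tolerance` threshold, and all of the numbers are illustrative assumptions, not part of any established framework or real data set.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """One firm or industry in one period (hypothetical structure)."""
    wage_bill: float     # total compensation paid to workers
    value_added: float   # output minus intermediate inputs


def labor_share(obs: Observation) -> float:
    """Fraction of value added that goes to labor."""
    if obs.value_added <= 0:
        raise ValueError("value added must be positive")
    return obs.wage_bill / obs.value_added


def classify_change(before: Observation, after: Observation,
                    tolerance: float = 0.005) -> str:
    """Label a technology by the direction in which the labor share moved.

    The tolerance is an arbitrary assumption that treats very small
    movements as neutral.
    """
    change = labor_share(after) - labor_share(before)
    if change > tolerance:
        return "labor share rose"
    if change < -tolerance:
        return "labor share fell"
    return "roughly neutral"


# Made-up numbers: value added grows in both cases, but only in the
# second case do workers receive part of the gain.
baseline = Observation(wage_bill=60.0, value_added=100.0)
automation_like = Observation(wage_bill=60.0, value_added=120.0)
new_tasks_like = Observation(wage_bill=78.0, value_added=120.0)

print(classify_change(baseline, automation_like))
print(classify_change(baseline, new_tasks_like))
```

A real measurement would face problems this sketch ignores, such as separating the effect of one technology from everything else that changes in a firm over the same period.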

Second, we must pay attention to norms. In the same way that millions of employees demand that their companies reduce their carbon footprint—or that many nuclear physicists would not be willing to work on developing nuclear weapons—AI researchers should become more aware of, more sensitive to, and more vocal about the social consequences of their actions. But the onus is not just on them. We all need to identify and agree on what types of AI applications contribute to our social ills. A clear consensus on these questions may then trigger self-reinforcing changes in norms as AI researchers and firms feel the social pressure from their families, friends, and society at large.

Third, all of this needs to be embedded in democratic governance. It is easier for the wrong path to persist when decisions are made without transparency and by a small group of companies, leaders, and researchers not held accountable to society. Democratic input and discourse are vital for breaking that cycle.

We are nowhere near a consensus on this, and changes in norms and democratic oversight remain a long way away. Nonetheless, such a transformation is not impossible. We may already be seeing the beginning of a social awakening. For example, NSO Group’s Pegasus technology grabbed headlines when it was used to hack Amazon founder and owner Jeff Bezos’s phone, monitor Saudi dissidents, and surveil Mexican lawyers, UK-based human rights activists, and Moroccan journalists. The public took note. Public pressure forced Juliette Kayyem—a former Obama administration official, Harvard professor, and senior advisor to the NSO Group—to resign from her position with the spyware company and cancel a webinar on female journalist safety she planned to hold at Harvard. Public pressure also recently convinced IBM, Amazon, and Microsoft to temporarily stop selling facial recognition software to law enforcement because of evidence of these technologies’ racial and gender biases and their use in the tracking and deportation of immigrants. Such social action against prominent companies engaged in dubious practices, and the academics and experts working for them, is still the rare exception. But it can and should happen more often if we want to redirect our efforts toward better AI.

The mountains ahead

Alas, I have to end this essay not with a tone of cautious optimism, but by identifying some formidable challenges that lie ahead. The type of transformation I’m calling for would be difficult at the best of times. But several factors are complicating the situation even further.

For one thing, democratic oversight and changes in societal norms are key for turning around the direction of AI research. But as AI technologies and other social trends weaken democracy, we may find ourselves trapped in a vicious circle. We need a rejuvenation of democracy to get out of our current predicament, but our democracy and tradition of civic action are already impaired and wounded. Another important factor, as I have already mentioned, is that the current pandemic may have significantly accelerated the trend toward greater automation and distrust in democratic institutions.

Finally, and perhaps most important, the international dimension deepens the challenge. Suppose, despite all of the difficulties ahead, there is a U.S. democratic awakening and a consensus emerges around redirecting AI. Even then, hundreds of thousands of researchers in China and other countries can still pursue surveillance and military applications of AI technology and eagerly continue the trend toward automation. Could the United States ignore this international context and set the future direction of AI on its own? Probably not. Any redirection of AI therefore needs to be founded on at least a modicum of international coordination. Unfortunately, the weakening of democratic governance has made international cooperation harder and international organizations even more toothless than they were before.

None of this detracts from the main message of this essay: the direction of future AI and the future health of our economy and democracy are in our hands. We can and must act. But it would be naïve to underestimate the enormous challenges we face.